Diana (Yiyang) Wang Portfolio

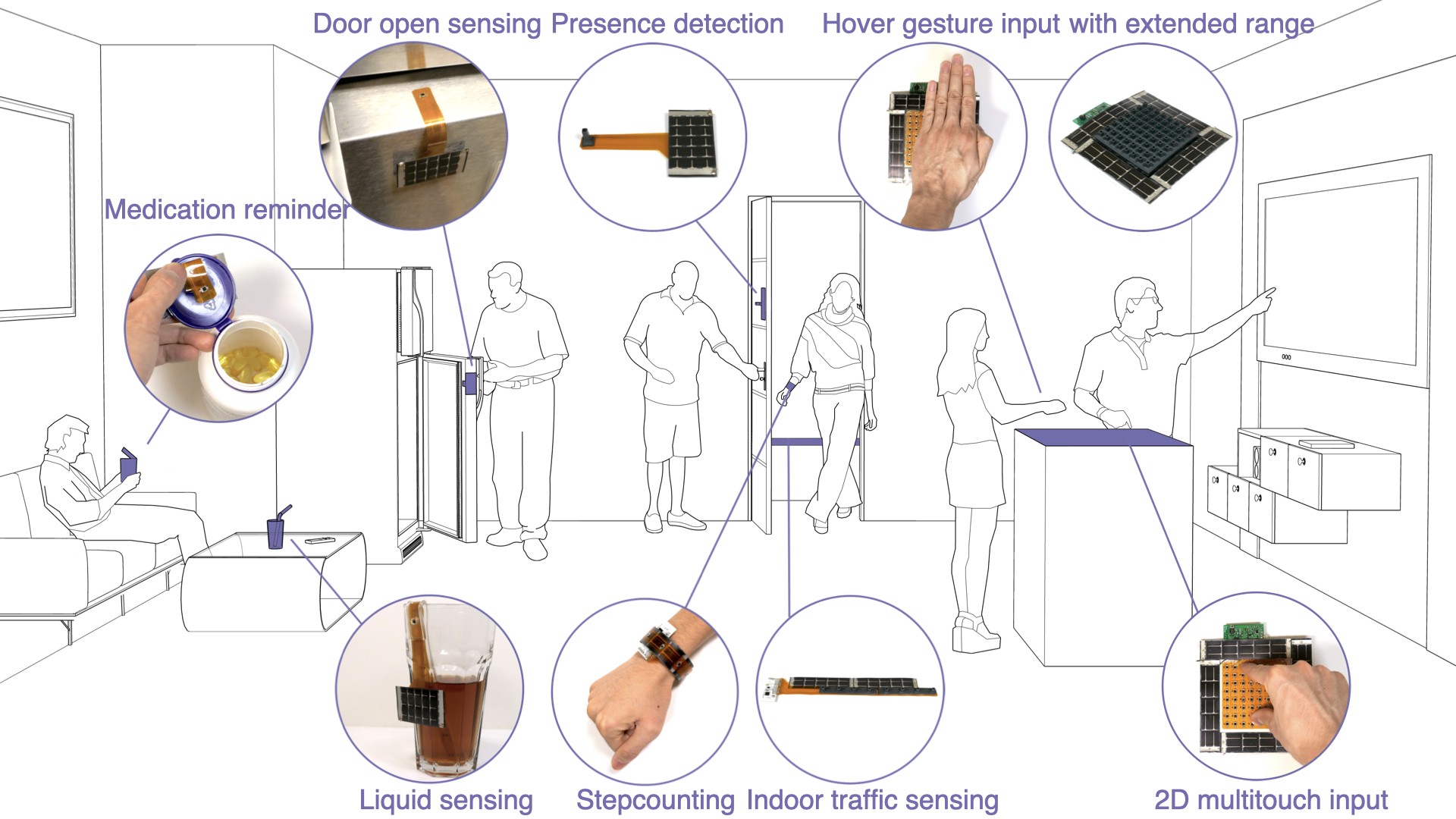

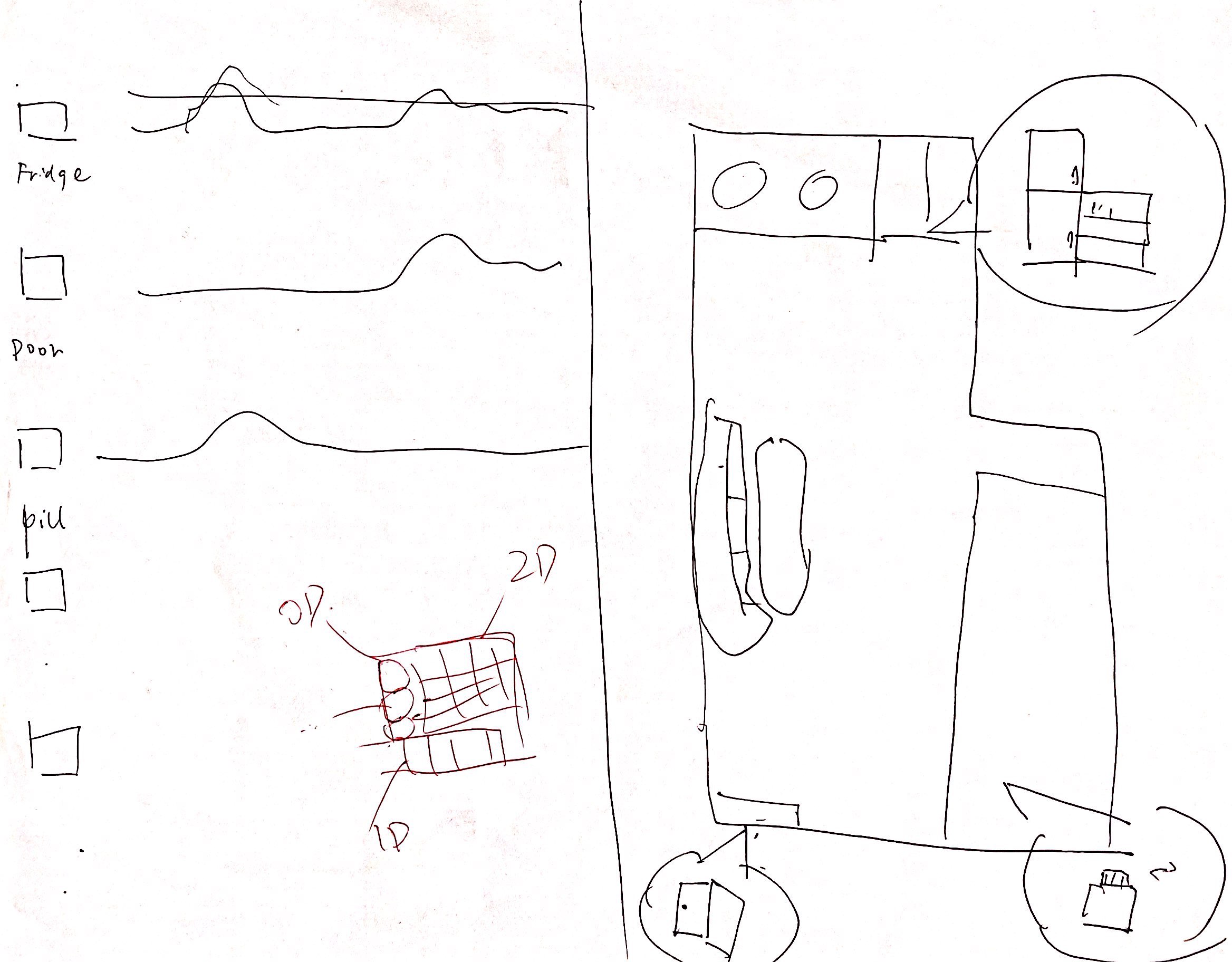

In this project, my main contribution was designing and implementing user interfaces for optical sensors developed by the Georgia Tech Ubicomp Lab. To support multiple real-world applications, the system first identifies the optical signal patterns and then sets a customized threshold to categorize the state of devices or user actions. In our study, we supported implicit activity sensing in applications with varying sensing dimensions (0D, 1D, 2D), fields of view (wide, narrow), and perspectives (egocentric, allocentric). Example applications include object use (door open/close, drawer open/close, medicine bottle open/close), indoor step counting, liquid sensing (tea/coffee liquid level in a mug), and multitouch input (hover and touch).
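As a rough illustration of the thresholding step (not the lab's actual code), here is a minimal Python sketch. It assumes light readings normalized to the range 0 to 1 and that the "open" state is the brighter one. The function names and sample values are hypothetical.

```python
from statistics import mean


def calibrate_threshold(open_samples, closed_samples):
    """Place the threshold midway between the mean light level of each state."""
    return (mean(open_samples) + mean(closed_samples)) / 2


def classify(reading, threshold, bright_label="open", dark_label="closed"):
    """Label a single reading by comparing it with the calibrated threshold."""
    return bright_label if reading >= threshold else dark_label


# Hypothetical calibration readings for a drawer sensor (normalized 0..1).
open_samples = [0.82, 0.79, 0.85]
closed_samples = [0.11, 0.14, 0.09]

threshold = calibrate_threshold(open_samples, closed_samples)
states = [classify(r, threshold) for r in [0.80, 0.12, 0.50]]
```

A per-application threshold like this is what lets the same sensor be reused for a door, a drawer, or a medicine bottle: only the calibration samples change.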

One of the main goals of ubiquitous computing is to sense implicit and explicit user input through devices embedded in the environment. However, existing camera-based solutions can invade people's privacy. Additionally, many battery-powered sensors require maintenance, which makes ubiquitous deployment hard. Therefore, we presented OptoSense, a general-purpose and self-powered sensing system that leverages ambient light signals to infer user behaviors and actions.

Our work was published in the Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies (IMWUT) and received the IMWUT Distinguished Paper Award.

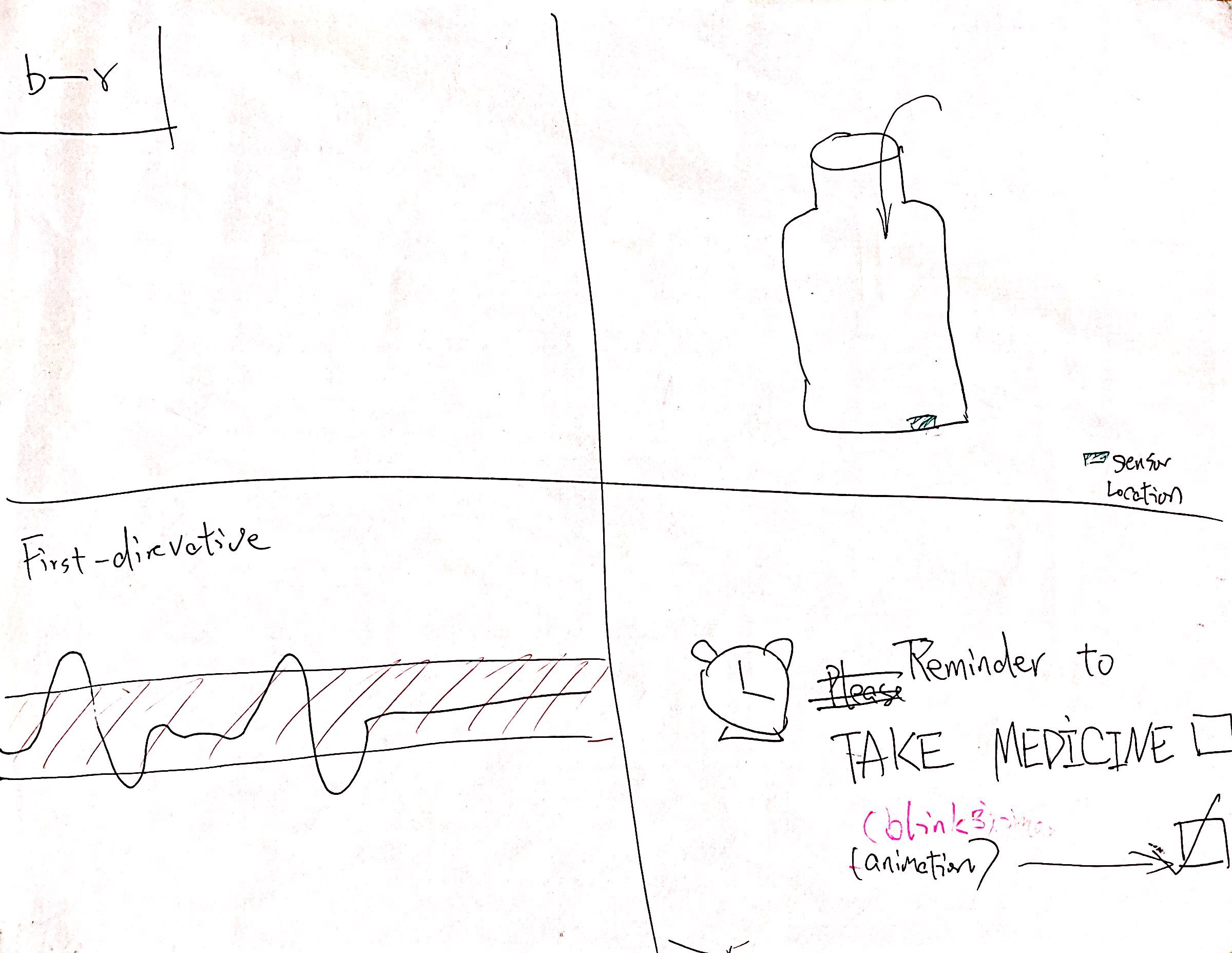

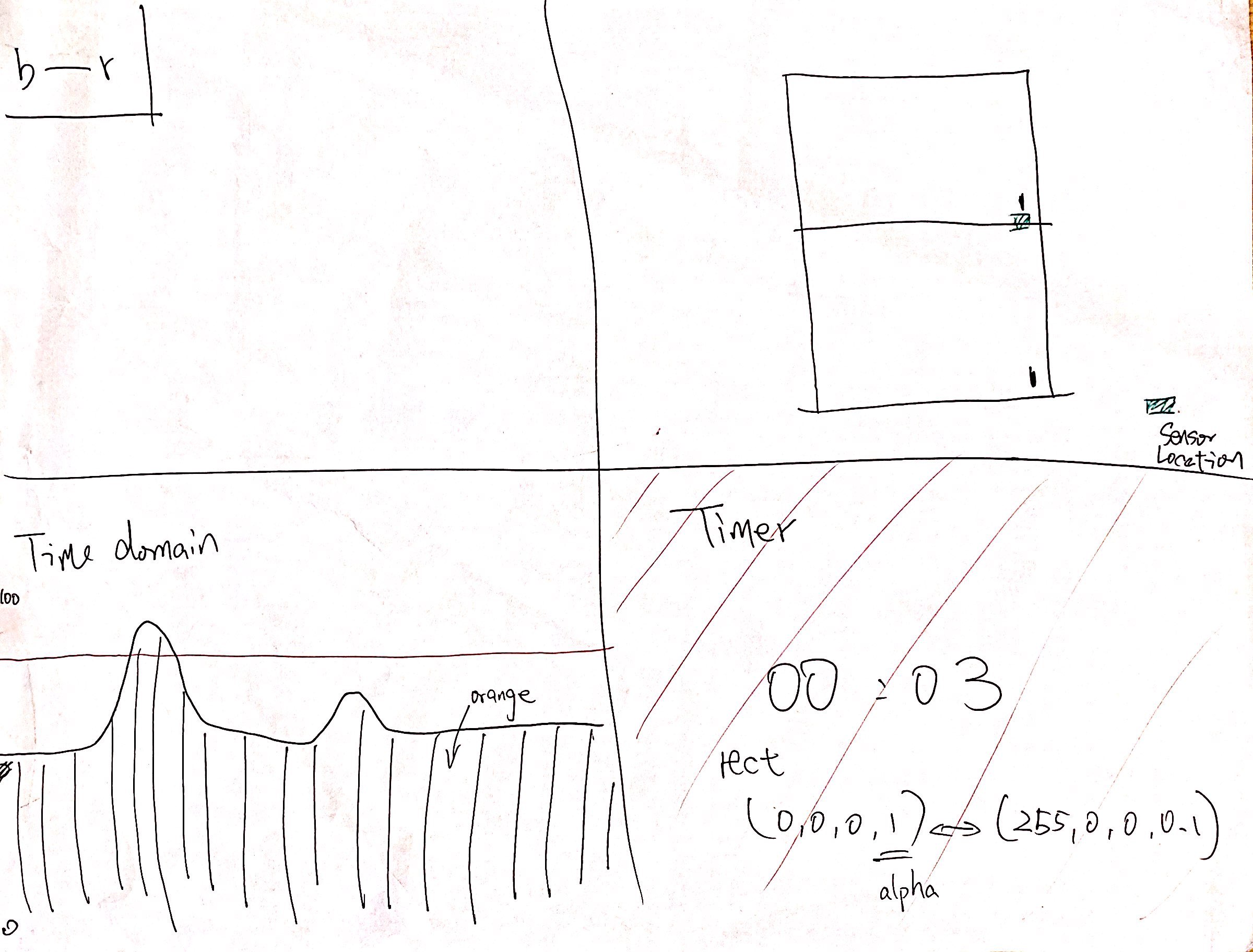

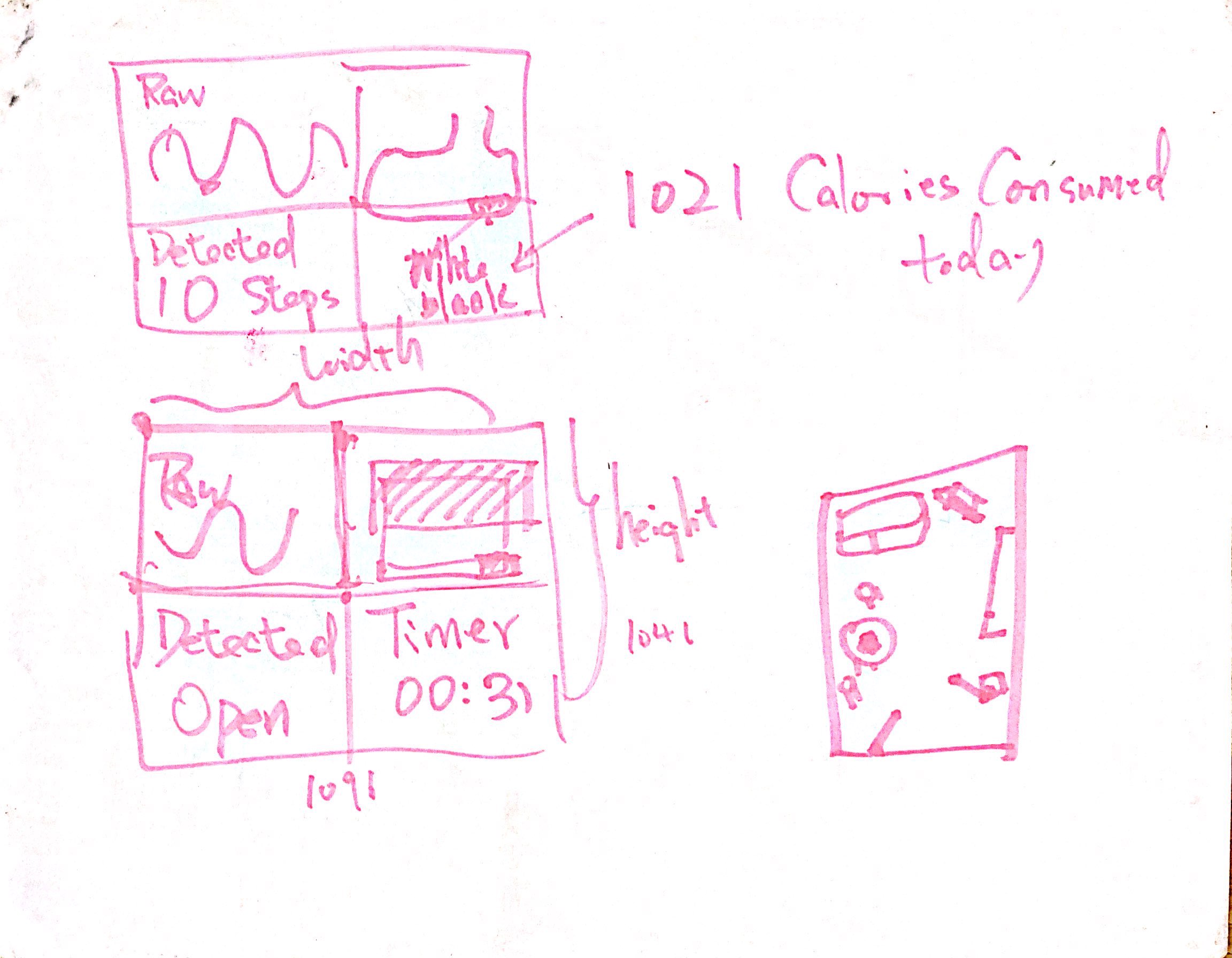

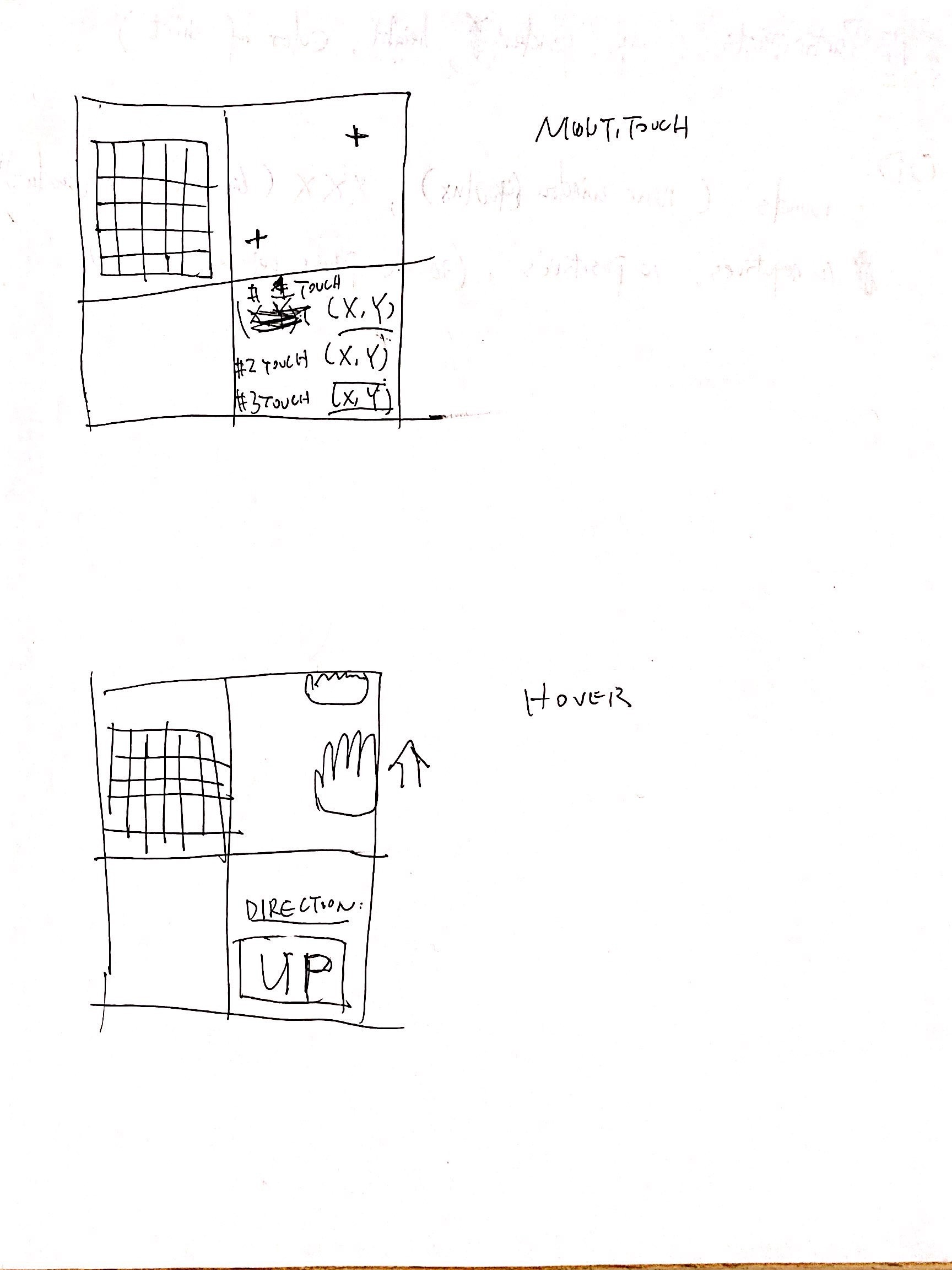

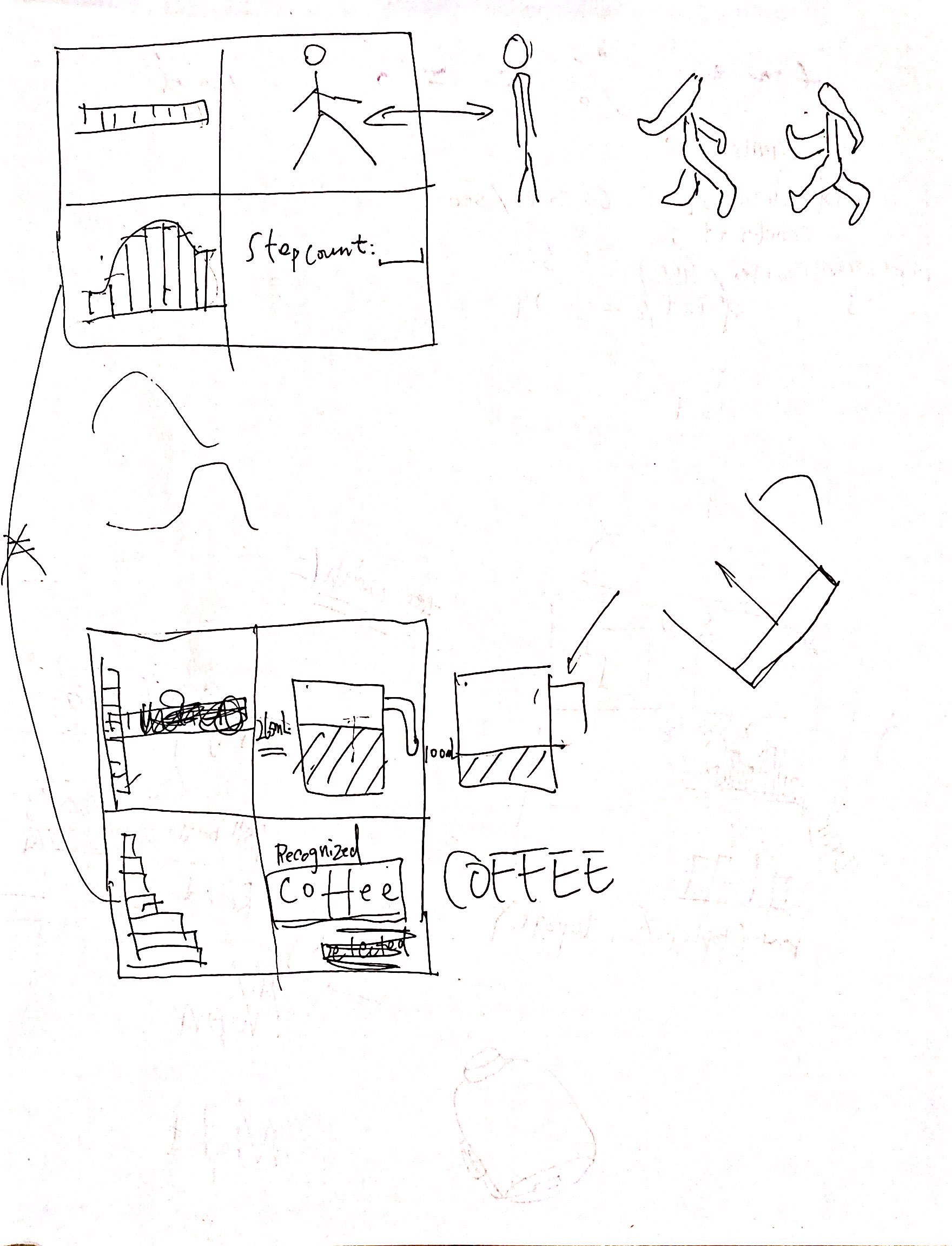

The user interfaces were mostly meant for demonstrating the system to engineers through the lens of a real-world application. I therefore wanted them to both help engineers understand the raw signals coming from our OptoSense sensors and show how customers could use the system in applications such as smart homes. I allocated the left half of the screen to engineers: it shows the raw signals in a plot and visualizes how much ambient light each sensor receives through varying colors. On the right half of the screen, I designed a simple animation of what we infer is happening in the real world, along with a text explanation.
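The color visualization can be thought of as a mapping from light level to color. The Python sketch below shows one possible mapping, assuming normalized readings (0 to 1) and a simple linear blend between two RGB colors. The specific colors and the function name are my own illustrative choices, not those used in the actual interface.

```python
def light_to_rgb(level, dark=(20, 20, 60), bright=(255, 230, 120)):
    """Blend linearly from a dark color to a bright color as light increases."""
    # Clamp so out-of-range readings still produce a valid color.
    level = min(1.0, max(0.0, level))
    return tuple(round(d + (b - d) * level) for d, b in zip(dark, bright))


# One color per sensor, e.g. for a hypothetical row of four sensors.
sensor_levels = [0.0, 0.25, 0.75, 1.0]
sensor_colors = [light_to_rgb(level) for level in sensor_levels]
```

Clamping the input keeps the display stable when a sensor briefly reports a value outside the calibrated range.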

I started by sketching the user interfaces on paper, then implemented them in code using Processing. The final user interfaces work both synchronously (integrated with the sensors in real time) and asynchronously (replaying pre-recorded values).
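One way to support both modes is to have the interface consume readings from an interchangeable source. The Python sketch below illustrates the idea; it is not the original Processing code. The one-value-per-line recording format, the `read_sensor` callback, and all function names are assumptions made for illustration.

```python
import csv
import io
from typing import Callable, Iterable, Iterator, List


def recorded_source(csv_text: str) -> Iterator[float]:
    """Replay pre-recorded readings, one value per line (assumed format)."""
    for row in csv.reader(io.StringIO(csv_text)):
        if row:
            yield float(row[0])


def live_source(read_sensor: Callable[[], float], count: int) -> Iterator[float]:
    """Poll a sensor `count` times; `read_sensor` is a hypothetical callback."""
    for _ in range(count):
        yield read_sensor()


def render(source: Iterable[float], threshold: float = 0.5) -> List[str]:
    """Stand-in for the drawing code: label each reading as it arrives."""
    return ["bright" if value >= threshold else "dark" for value in source]


replayed = render(recorded_source("0.9\n0.1\n0.6\n"))
polled = render(live_source(lambda: 0.7, count=2))
```

Because `render` only sees an iterable of readings, the same drawing logic runs unchanged whether the values come from hardware or from a file.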

OptoSense 2020

Atlanta